Full Stack Observability is a term that comes up often in conversations about observability and monitoring. But what does it mean? What is the Data Fabric approach, and why does your business need it? Let’s explore.

The goal of Full Stack Observability is to provide a holistic view of the entire software stack, allowing teams to proactively identify and resolve issues, optimize performance, and improve user experience. It enables effective troubleshooting, root cause analysis, and proactive performance optimization by monitoring and analyzing data from multiple layers of the stack, including infrastructure, application metrics, logs, traces, and user interactions.

What is Full Stack Observability?

Full Stack Observability is a comprehensive approach to monitoring and understanding the performance and behavior of all components within a software system, including infrastructure, application code, and user experience. It involves collecting, analyzing, and correlating data from various sources to gain deep insights into the system’s health, performance, and potential issues.

To illustrate, let’s consider the case of an e-commerce website. With Full Stack Observability, we can monitor and analyze various aspects of the system, including:

- Infrastructure Monitoring: Monitoring the underlying infrastructure, such as servers, databases, and network components, provides insights into resource utilization, latency, and availability. For example, monitoring CPU usage, memory utilization, and disk I/O can help identify performance bottlenecks or capacity issues.

- Application Performance Monitoring (APM): APM tools provide detailed visibility into the application code, tracing requests as they flow through the system. They help identify slow-performing components, inefficient database queries, and errors in the code. For example, tracking response times, database query performance, and error rates can help pinpoint areas for improvement.

- Log Management: Collecting and analyzing logs from different parts of the system, including application logs, server logs, and database logs, can reveal valuable insights. Logs can help troubleshoot errors, identify security breaches, and track user activity. For instance, analyzing logs can help detect abnormal login attempts, identify patterns leading to system failures, or track user actions during a specific period.

- Distributed Tracing: Distributed tracing tracks individual requests as they traverse different microservices or components. It provides end-to-end visibility into dependencies, performance bottlenecks, and latency in the system. For example, tracing a user’s request from the front end to the backend services can help identify slow database queries or excessive network latency.

- User Experience Monitoring: Monitoring user interactions and measuring metrics like page load times, click-through rates, and conversion rates provides insights into user satisfaction and overall system performance. For example, tracking user session durations, page load times, and conversion rates can help optimize the user experience and identify areas for improvement.

Active Observability means having visibility into all layers of your technology stack. Collecting, correlating, and aggregating telemetry from every component provides insight into the behavior, performance, and health of the system. A well-designed Active Observability strategy, backed by the right platform, can not only support forensics but also help avoid potential problems by forecasting them ahead of time.

To achieve this, you need data from every layer of the technology stack so that you get a complete view of the health and performance of your system. This is where MELT comes in. MELT is the set of telemetry types that supply this data, making Active Observability possible.

What is MELT?

MELT is an acronym for:

- (M)etrics: Data that represents the performance of your system. This can be things like response times, CPU utilization, or memory usage.

- (E)vents: Data that represents significant changes of state in your system. This can be things like application restarts, deployments, or errors.

- (L)ogs: Data that represents the actions taken by your system. This can be things like access logs, application logs, or database query logs.

- (T)races: Data that represents the flow of a request as it goes through your system. This can be used to diagnose performance issues or identify errors.

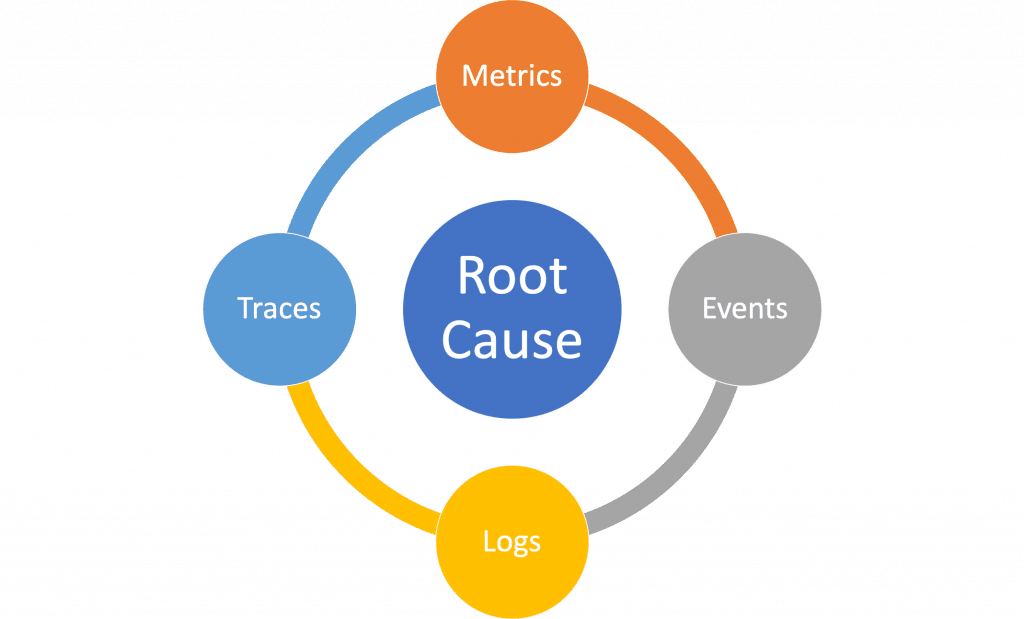
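As a rough illustration, the four MELT signal types could be modeled as simple records. This is a minimal sketch only: the class and field names (`Metric`, `Event`, `LogRecord`, `Span`, `trace_id`, and so on) are assumptions made for this example and do not reflect any particular vendor’s schema.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Metric:
    """A numeric measurement at a point in time, e.g. CPU utilization."""
    name: str
    value: float
    timestamp: float
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Event:
    """A significant state change, e.g. a deployment or a restart."""
    kind: str
    timestamp: float
    attributes: Dict[str, str] = field(default_factory=dict)


@dataclass
class LogRecord:
    """A line describing an action the system took."""
    message: str
    timestamp: float
    severity: str = "INFO"
    trace_id: Optional[str] = None  # hypothetical field tying a log to a trace


@dataclass
class Span:
    """One step of a request; spans sharing a trace_id form a trace."""
    trace_id: str
    span_id: str
    name: str
    start: float
    end: float
    parent_id: Optional[str] = None

    @property
    def duration(self) -> float:
        return self.end - self.start


# Illustrative values only.
span = Span(trace_id="t1", span_id="s1", name="db.query", start=10.0, end=10.25)
log = LogRecord(message="slow query", timestamp=10.25, trace_id=span.trace_id)
print(span.duration, log.trace_id == span.trace_id)
```

Carrying a shared identifier such as `trace_id` on both logs and spans is one common way to make the signals correlatable later.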

MELT and Root Cause Analysis

Now that we understand MELT, let us see why root cause analysis depends on it. Root cause analysis is the process of identifying the underlying cause of a problem. For example, let us say an application shows a performance degradation, such as an API with a slower response time. To find the root cause, you first need a way to see that the API is running slow. This is where metrics play a role. By looking at the metrics of the API, you can see that the response time has increased.

But this is not enough to get to the root cause. You also need to understand why the API is running slow. This is where traces come in. Traces show you the flow of a request as it goes through your system. By looking at the trace data, you can see that the slowdown is happening at the database query.

Now that you know where the problem is, you need to understand why the database query is slow. This is where logs come in. By looking at the logs of the database query, you can see that there was a recent change to the schema that is causing the slowdown.

Events also help in this process. By raising an event whenever database connectivity fails, you can create an alert that notifies you as soon as it happens.
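The metrics, traces, and logs steps described above can be sketched in a few lines. This is a toy example under stated assumptions: the sample data, the 2x latency threshold, and the helper names (`is_degraded`, `slowest_child`, `logs_from`) are all hypothetical and chosen only to mirror the walkthrough.

```python
from statistics import mean

# Hypothetical telemetry for one slow API endpoint.
baseline_ms = [120, 130, 125]
current_ms = [480, 510, 495]

spans = [
    {"trace_id": "t1", "name": "GET /orders", "parent": None, "duration_ms": 500},
    {"trace_id": "t1", "name": "auth.check", "parent": "GET /orders", "duration_ms": 20},
    {"trace_id": "t1", "name": "db.query", "parent": "GET /orders", "duration_ms": 450},
]

logs = [
    {"source": "api", "message": "request completed"},
    {"source": "db", "message": "schema change applied to table orders"},
    {"source": "db", "message": "query on orders took 450 ms"},
]


def is_degraded(baseline, current, factor=2.0):
    """Metrics step: flag the endpoint if mean latency grew past `factor`."""
    return mean(current) > factor * mean(baseline)


def slowest_child(spans, trace_id):
    """Traces step: find the child span that took the longest."""
    children = [s for s in spans if s["trace_id"] == trace_id and s["parent"]]
    return max(children, key=lambda s: s["duration_ms"])


def logs_from(logs, source):
    """Logs step: pull the messages emitted by the suspect component."""
    return [entry["message"] for entry in logs if entry["source"] == source]


if is_degraded(baseline_ms, current_ms):
    suspect = slowest_child(spans, "t1")
    component = suspect["name"].split(".")[0]  # "db.query" -> "db"
    print(suspect["name"], logs_from(logs, component))
```

In a real system each step would query a metrics store, a tracing backend, and a log index respectively; the point here is only the order in which the signals narrow the search.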

Thus, MELT is essential for root cause analysis. But is MELT alone enough for Active Observability? Does MELT address all of your needs? What if you need the data available to other consumers? How do you know the data you are collecting is relevant and optimal? How do you handle compliance? There are many other things to consider as well. This is where an Active Observability Data Fabric, or Operational Data Fabric, comes in.

But first, let’s have a brief overview of what a Data Fabric is about.

What is a Data Fabric?

Data Fabric is a strategic framework that enables businesses to seamlessly integrate, manage, and analyze data from various sources in real time, harnessing the capabilities of AI and ML. It serves as a vital link between IT teams and business professionals, fostering collaboration and driving organizational growth.

Put simply, Data Fabric is an architectural approach to data management that provides a unified view of an organization’s data, regardless of where it is stored. By implementing Data Fabric, organizations gain enhanced efficiency in accessing, managing, and sharing their data, irrespective of its storage location.

In essence, a data fabric architecture empowers businesses to consolidate, govern, and orchestrate data effortlessly, resulting in improved data availability and accessibility.

The Key Capabilities of Data Fabric include:

- Data integration and unification

- Data governance and security

- Data processing and analytics

- Data storage and optimization

- Scalability and performance

From MELT to an Operational Full Stack Observability Data Fabric

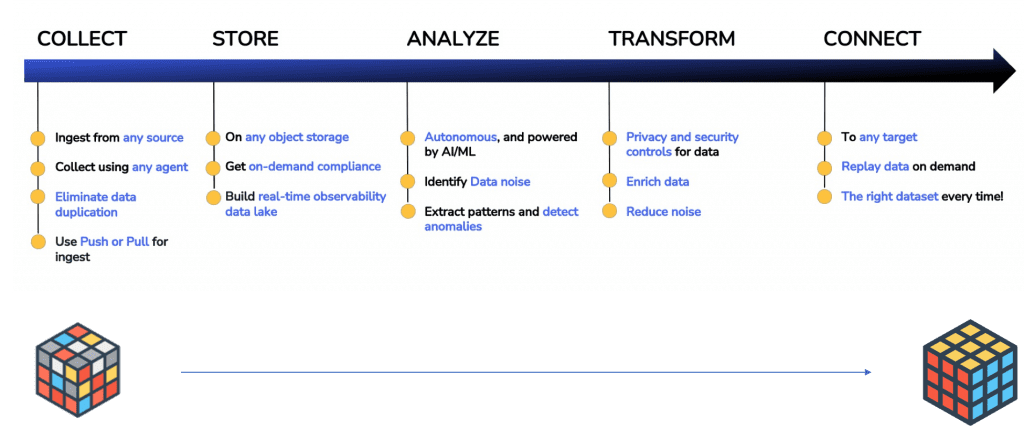

An Active Operational Data Fabric is a platform that not only lets you collect, store, and query data from all layers of your technology stack but also gives you controls to transform that data and connect it to consumers on demand. A data source can be any component that generates data, such as cloud services, virtual machines, Kafka streams, or networked devices.

The key to an Active Observability data fabric is to first create an observability data lake, which serves as the master repository of all your data. This data lake should be able to ingest data from all sources with minimal transformation and let you query this data in real time.

From this observability data lake, you can then connect your data to consumers on demand using a variety of methods such as streaming, batch jobs, or API calls. This not only delivers observability data to the consumers that need it but also lets you control how this data is used.
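One way to picture connecting data to consumers on demand is a small router that forwards each record to every consumer whose rule matches. This is an illustrative sketch, not a description of any product’s API: the `Router` class, its `connect` and `publish` methods, and the consumer names are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]
Predicate = Callable[[Record], bool]
Sink = Callable[[Record], None]


class Router:
    """Forwards each record to every connected consumer whose rule matches."""

    def __init__(self) -> None:
        self._routes: List[Tuple[str, Predicate, Sink]] = []

    def connect(self, name: str, predicate: Predicate, sink: Sink) -> None:
        """Attach a consumer; it can be added at any time, hence 'on demand'."""
        self._routes.append((name, predicate, sink))

    def publish(self, record: Record) -> List[str]:
        """Deliver a record and return the names of consumers that got it."""
        delivered = []
        for name, predicate, sink in self._routes:
            if predicate(record):
                sink(record)
                delivered.append(name)
        return delivered


# Two hypothetical consumers: an alerting tool and a long-term archive.
alerts: List[Record] = []
archive: List[Record] = []

router = Router()
router.connect("alerts", lambda r: r.get("severity") == "ERROR", alerts.append)
router.connect("archive", lambda r: True, archive.append)

print(router.publish({"severity": "ERROR", "message": "db connection lost"}))
print(router.publish({"severity": "INFO", "message": "request completed"}))
```

Here the sinks are in-memory lists; in practice a sink might write to a stream, schedule a batch export, or serve an API request.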

Understanding Data Control

Data control is an important part of any observability strategy. It includes data filtering, data augmentation, volume reduction, control of license spending, and flexible, on-demand control over data retention. For example, some parts of your observability data may need to be retained for 30 days while others must be kept for 1 year.

Let us look at a few other examples of data control. Data filtering is the process of removing sensitive or unwanted data from your observability data before it is made available to consumers. This can be done using a variety of methods such as whitelisting, blacklisting, or user-defined filters. Data augmentation, or enrichment, is the process of adding context to your observability data. Data control covers many more techniques beyond these.
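The controls described above (filtering, enrichment, volume reduction, and retention) can be combined into a single processing step. The following is a minimal sketch under assumptions: the field names (`path`, `host`, `category`, `retention_days`), the `/healthz` path, the email pattern, and the retention table are all hypothetical, with the 30-day and 1-year values taken from the retention example above.

```python
import re
from typing import Dict, Optional

# Hypothetical pattern for one kind of sensitive data (email addresses).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Hypothetical retention policy per data category, in days.
RETENTION_DAYS = {"debug": 30, "audit": 365}
DEFAULT_RETENTION_DAYS = 30


def control(record: dict, host_metadata: Dict[str, dict]) -> Optional[dict]:
    """Apply volume reduction, filtering, enrichment, and retention tagging."""
    # Volume reduction: drop unwanted records such as health-check noise.
    if record.get("path") == "/healthz":
        return None

    out = dict(record)  # leave the caller's record untouched

    # Filtering: mask sensitive values before consumers see them.
    out["message"] = EMAIL.sub("[REDACTED]", out.get("message", ""))

    # Enrichment: add context looked up from the record's host.
    out.update(host_metadata.get(out.get("host"), {}))

    # Retention: tag how long this record should be kept.
    out["retention_days"] = RETENTION_DAYS.get(
        out.get("category"), DEFAULT_RETENTION_DAYS
    )
    return out


metadata = {"web-1": {"region": "eu-west", "team": "checkout"}}
raw = {
    "host": "web-1",
    "category": "audit",
    "message": "login failed for alice@example.com",
}
print(control(raw, metadata))
```

Running controls like these before data reaches downstream tools is also what enables the volume and license-spend reductions mentioned earlier, since dropped or trimmed records never get ingested by the consumer.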

Here’s an infographic that can help you visualize the various aspects and benefits of implementing an observability data fabric for your organization.

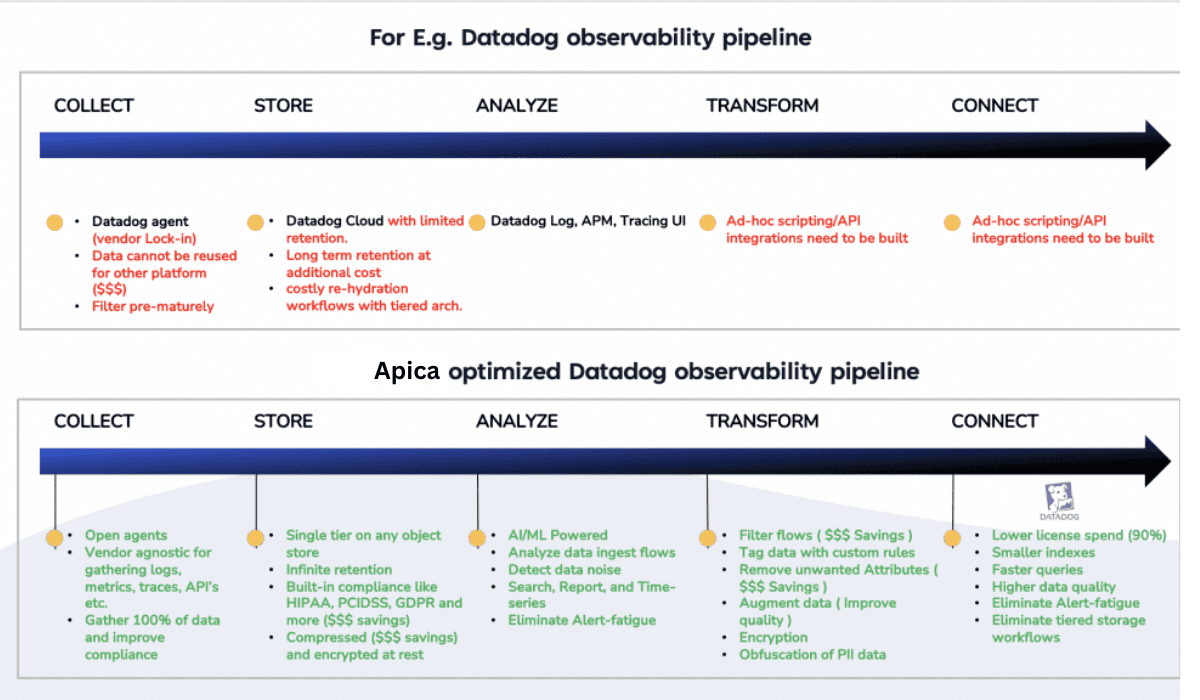

Let us see how this can help with your observability implementations. Let us take an example of a Datadog observability implementation. How can an observability data fabric help with Datadog?

The same gains can be realized by using the apica.io observability data fabric with many vendor solutions such as Splunk, New Relic, and Elastic, to name a few.

An Active Observability Data Fabric provides you with a complete view of your system by collecting data from all layers of your technology stack and making this data available to consumers on demand. This allows you not only to find the root cause of problems quickly but also to prevent problems from happening in the first place.

Why do Businesses need Full Stack Observability?

In the modern landscape, businesses need Full Stack Observability for the following reasons:

1. Comprehensive Visibility: Provides a holistic view of an application’s components, dependencies, and performance metrics. This enables IT teams to gain deep insights into the behavior and health of the entire application ecosystem, facilitating better decision-making and problem-solving.

2. Collaboration and Breaking Silos: Breaks down operational silos by aligning IT teams around a shared context. This promotes collaboration and eliminates finger-pointing, resulting in improved incident response time and reduced application downtime.

3. Prioritizing Impactful Issues: Allows IT teams to prioritize issues based on their impact on user experience and business outcomes. By correlating application performance with user satisfaction and revenue generation, businesses can focus their efforts on addressing the most critical issues first, maximizing customer loyalty and financial success.

4. Proactive Problem Resolution: Goes beyond traditional monitoring tools by leveraging AI and ML solutions to proactively detect and diagnose issues. This proactive approach helps IT teams identify and resolve problems before they impact performance, minimizing disruptions for users and customers and ensuring smooth operations.

Therefore, in the dynamic and complex modern landscape, Full Stack Observability empowers businesses to gain comprehensive insights, foster collaboration, prioritize effectively, and proactively address issues, ultimately enabling them to deliver exceptional experiences and drive success.

Why choose Data Fabric?

In the face of overwhelming data volumes, businesses constantly grapple with the challenge of effectively managing and analyzing vast amounts of data. Data Fabrics emerge as a practical solution for data collection, processing, and analysis in the current scenario.

Data Fabric offers several advantages for businesses, accelerating their digital transformation and streamlining operations:

1. Efficient Data Management and Digital Transformation: Data Fabrics are highly scalable, enabling businesses to seamlessly handle data growth while keeping pace with technological advancements. This scalability ensures that businesses remain competitive in their respective industries.

2. Collaboration and Breaking Silos: The data fabric approach promotes collaboration and breaks down silos across different departments. This fosters improved organizational efficiency, faster decision-making, and heightened productivity.

3. Real-time Insights: By providing real-time insights into data, Data Fabric empowers businesses to make data-driven decisions swiftly and stay ahead in today’s competitive landscape.

4. Streamlined Data Management Efforts: Data Fabric delivers significant value through its analytical capabilities, reducing data management efforts by up to 70%. This acceleration in value delivery translates into a better return on investment (ROI).

5. Automation: Data Fabric offers automation and collaboration opportunities, allowing businesses to automate data governance, engineering, and protection tasks. This automation saves time and effort while ensuring data management accuracy and consistency.

In summary, Data Fabric presents a comprehensive solution for businesses to effectively manage their data, accelerate digital transformation, foster collaboration, gain real-time insights, streamline data management efforts, and leverage automation opportunities.

The Bottom Line: How Apica Helps

The adoption of data fabric is imperative for businesses seeking to overcome data management challenges and unlock the full potential of their data.

By breaking down data silos and providing a centralized platform for data management, businesses can address limited data access and integrate multiple data sources effectively. With streamlined data integration and automation of ETL processes, a data fabric reduces complexities and minimizes the risk of errors.

The result is improved data accessibility, integration, and analysis, leading to better decision-making and enhanced business outcomes.

Apica’s Operational Data Fabric exemplifies the power of this approach, offering simplified data integration, real-time data processing, robust data governance, scalability, and flexibility.

As the digital landscape evolves, businesses that leverage data fabric implementations will be well positioned to succeed and to accelerate their digital transformation.

Harnessing the capabilities of a data fabric is a strategic move that can drive operational optimization, cost reduction, and improved efficiency for businesses in the modern era.

So why wait? Sign up today and elevate the Full Stack Observability game for your business with the power of Apica’s Operational Data Fabric.