Intelligent telemetry pipeline: Store, send and control your data.

100% Pipeline control to maximize data value.

Collect, optimize, store, transform, route, and replay your data – however, whenever and wherever you need it.

PROBLEM

Are you struggling with scale, missing features and cost?

Ballooning costs

Licensing budget spent on non-critical data while critical data gets lost in the noise.

Data growth

Unpredictable and growing data volumes.

Compliance headaches

Forced premature data decisions that put the organization at risk.

Data sprawl

Poor data pipeline control results in high project costs and data latency.

Inadequate security

Data noise distracts from the real security issues.

BENEFITS

We can help. See how.

Increase data quality

Integration powered by Intelligent Optimization

Compliance

Highly compliant data in your data streams

Telemetry

Only essential telemetry data is streamed, leading to smaller indexes

AI/ML

Dynamic pattern recognition and data volume optimization

Instantaneous

100% of data is indexed and ready for instant replay, search, and reporting

Efficiency

Faster, more accurate remediation of operations and security incidents

FEATURES

Controls In Your Hands

Take control of your data

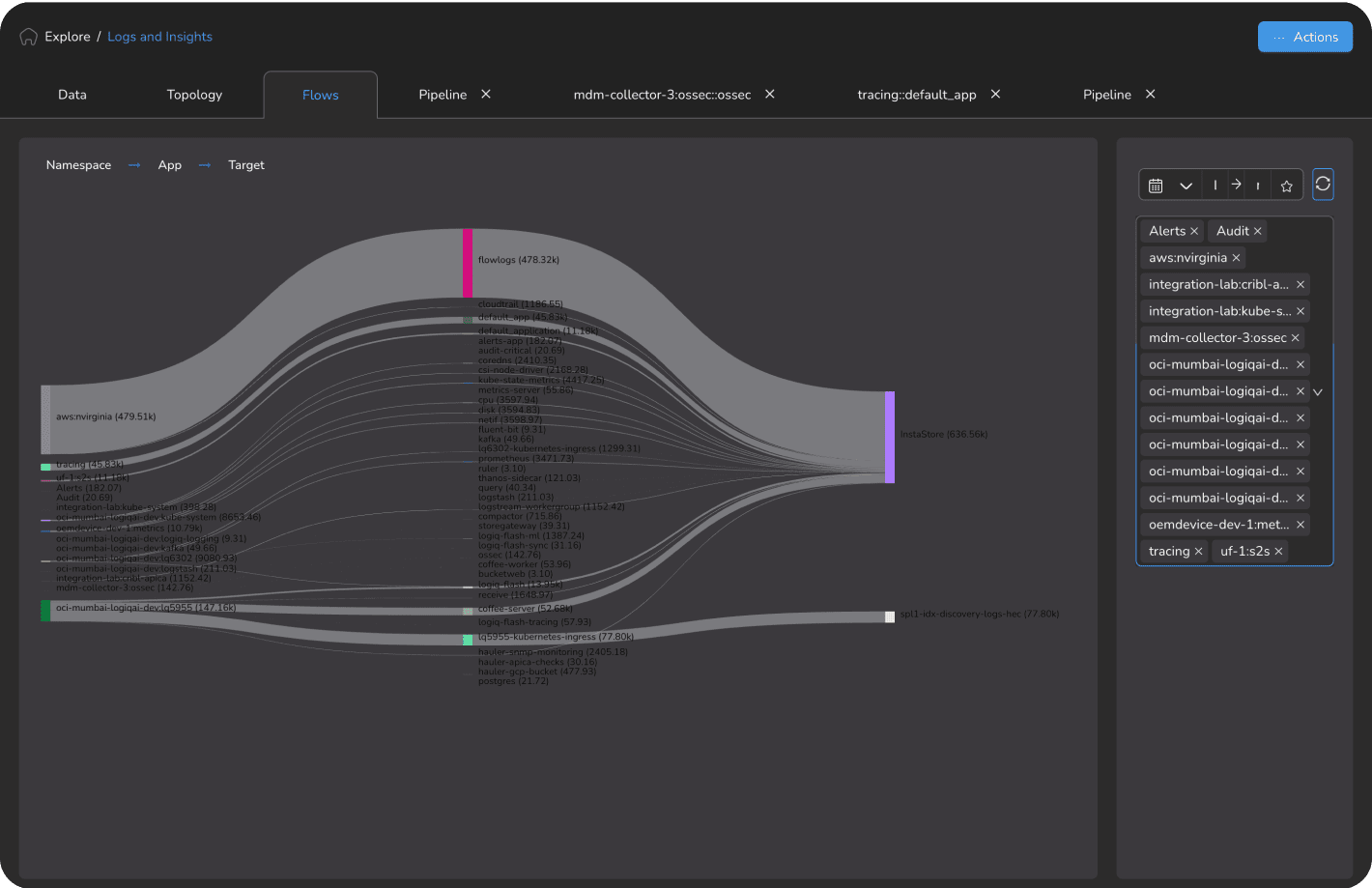

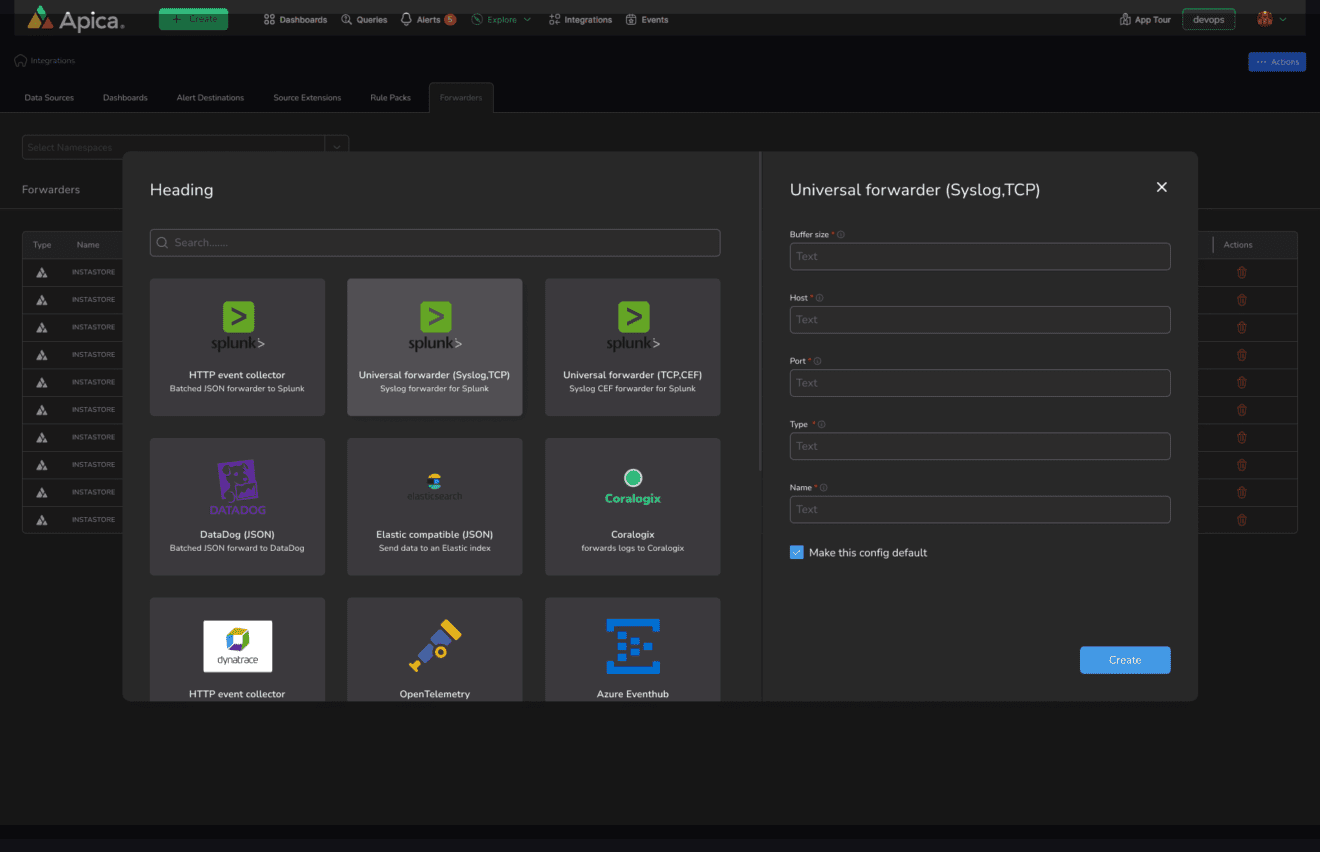

Rein in all of your distributed telemetry and log data using powerful constructs that aggregate logs from multiple sources. Improve data quality and forward your data to one or more destinations of your choice, including popular platforms such as Splunk, Elastic, Kafka, and Mongo.
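
To make the idea of aggregating sources and forwarding to several destinations concrete, here is a minimal sketch in plain Python. It is not Flow's API: the `route` function, the destination names (`siem`, `archive`), and the event fields are all hypothetical and only illustrate the fan-out pattern.

```python
from collections import defaultdict
from typing import Callable

Event = dict[str, object]


def route(events: list[Event],
          routes: dict[str, Callable[[Event], bool]]) -> dict[str, list[Event]]:
    """Deliver each event to every destination whose predicate accepts it."""
    delivered: dict[str, list[Event]] = defaultdict(list)
    for event in events:
        for destination, accepts in routes.items():
            if accepts(event):
                delivered[destination].append(event)
    return dict(delivered)


# Events aggregated from several (hypothetical) sources.
events = [
    {"source": "web-1", "level": "error", "message": "upstream timeout"},
    {"source": "web-2", "level": "info", "message": "request served"},
    {"source": "db-1", "level": "error", "message": "replication lag"},
]

# Hypothetical destinations: only errors go to the SIEM, everything is archived.
routes = {
    "siem": lambda e: e["level"] == "error",
    "archive": lambda e: True,
}

delivered = route(events, routes)
```

In a real pipeline each destination would be a connector (for example to Splunk or Kafka) rather than an in-memory list.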

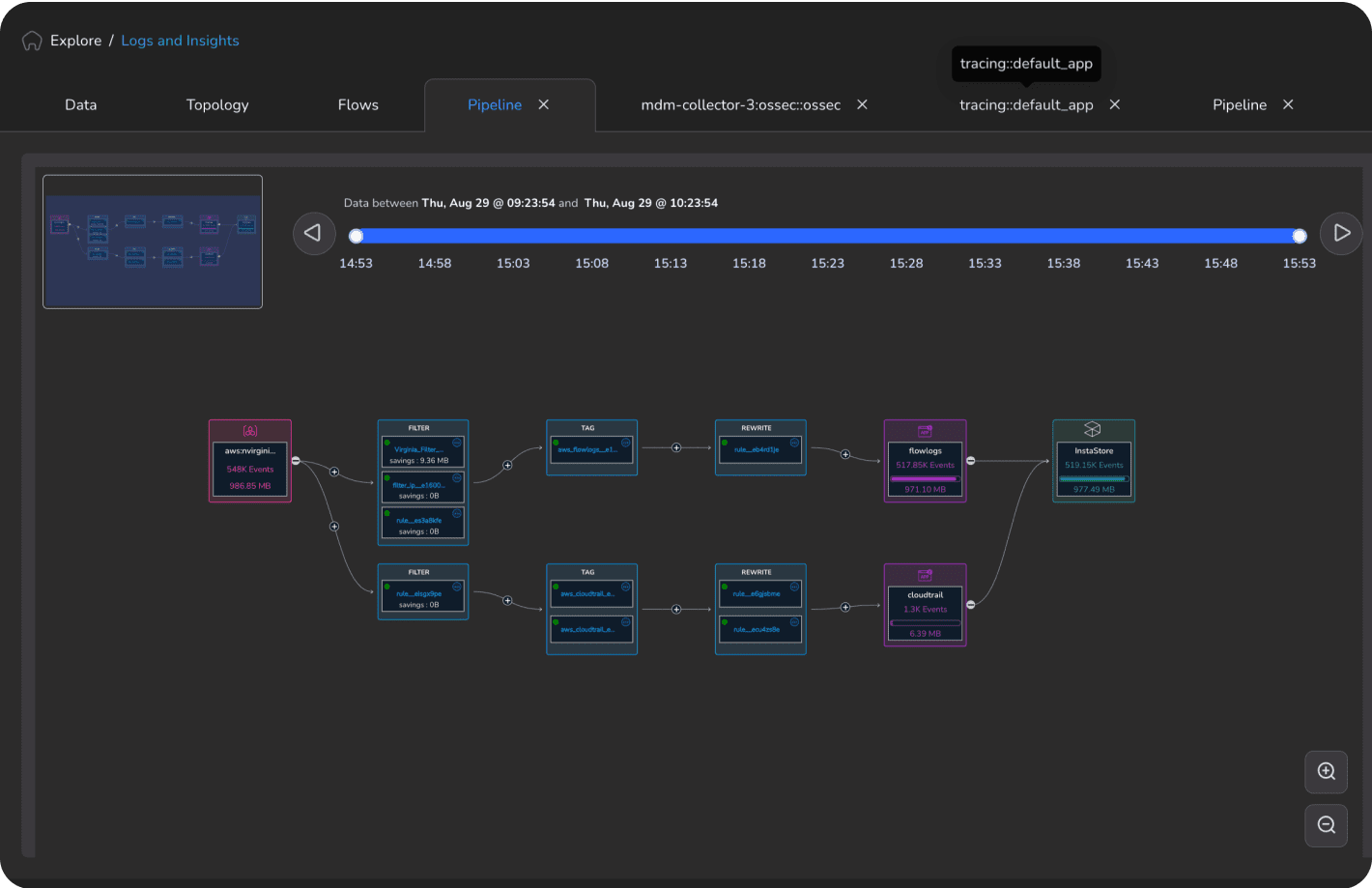

Build robust data pipelines

Flow fits right into your data pipeline to manage data operations. Our support for open standards such as JSON, Syslog, and RELP makes it easy to integrate into any pipeline.
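
As a rough illustration of why open formats make integration straightforward, the sketch below converts a BSD-style (RFC 3164) syslog line into JSON using only the Python standard library. The regular expression covers the common `<PRI>timestamp host tag[pid]: message` layout and is an assumption of this example, not a description of how Flow parses syslog.

```python
import json
import re

# Simplified pattern for BSD-style syslog lines; real traffic has more variants.
SYSLOG_RE = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<timestamp>[A-Z][a-z]{2}\s+\d{1,2}\s\d{2}:\d{2}:\d{2})\s"
    r"(?P<host>\S+)\s"
    r"(?P<tag>[^:\[\s]+)(?:\[(?P<pid>\d+)\])?:\s"
    r"(?P<message>.*)$"
)


def syslog_to_json(line: str) -> str:
    """Parse one syslog line and return it as a JSON document."""
    match = SYSLOG_RE.match(line)
    if match is None:
        raise ValueError(f"unrecognized syslog line: {line!r}")
    pri = int(match["pri"])
    record = {
        "facility": pri // 8,   # PRI = facility * 8 + severity
        "severity": pri % 8,
        "timestamp": match["timestamp"],
        "host": match["host"],
        "app": match["tag"],
        "pid": int(match["pid"]) if match["pid"] else None,
        "message": match["message"],
    }
    return json.dumps(record)


line = "<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8"
print(syslog_to_json(line))
```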

Create data lakes

Create data lakes with highly relevant and customizable data partitions for optimal query performance. Use any S3-compatible store on any public or private cloud. Save more with the built-in data compression at rest.
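
The following sketch shows one way partitioned, compressed objects for an S3-compatible store could be laid out. The partition scheme (`namespace`, `app`, date, hour), the object naming, and the event fields are assumptions made for illustration; the example builds the objects in memory and leaves the upload to whichever S3 client you use.

```python
import gzip
import json
from datetime import datetime, timezone


def partition_key(event: dict) -> str:
    """Derive a Hive-style partition prefix from an event (hypothetical scheme)."""
    ts = datetime.fromtimestamp(event["ts"], tz=timezone.utc)
    return (
        f"namespace={event['namespace']}/app={event['app']}/"
        f"date={ts:%Y-%m-%d}/hour={ts:%H}"
    )


def build_objects(events: list[dict]) -> dict[str, bytes]:
    """Group events by partition and gzip each group as newline-delimited JSON."""
    grouped: dict[str, list[dict]] = {}
    for event in events:
        grouped.setdefault(partition_key(event), []).append(event)
    return {
        f"{prefix}/part-0000.json.gz": gzip.compress(
            "\n".join(json.dumps(e) for e in batch).encode("utf-8")
        )
        for prefix, batch in grouped.items()
    }


events = [
    {"ts": 0, "namespace": "prod", "app": "api", "message": "started"},
    {"ts": 10, "namespace": "prod", "app": "api", "message": "ready"},
    {"ts": 3600, "namespace": "prod", "app": "api", "message": "heartbeat"},
]
objects = build_objects(events)
for key, body in objects.items():
    print(key, len(body), "bytes")
```

Queries that filter on the partition fields can then skip every object whose prefix does not match, which is where the query-performance benefit comes from.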

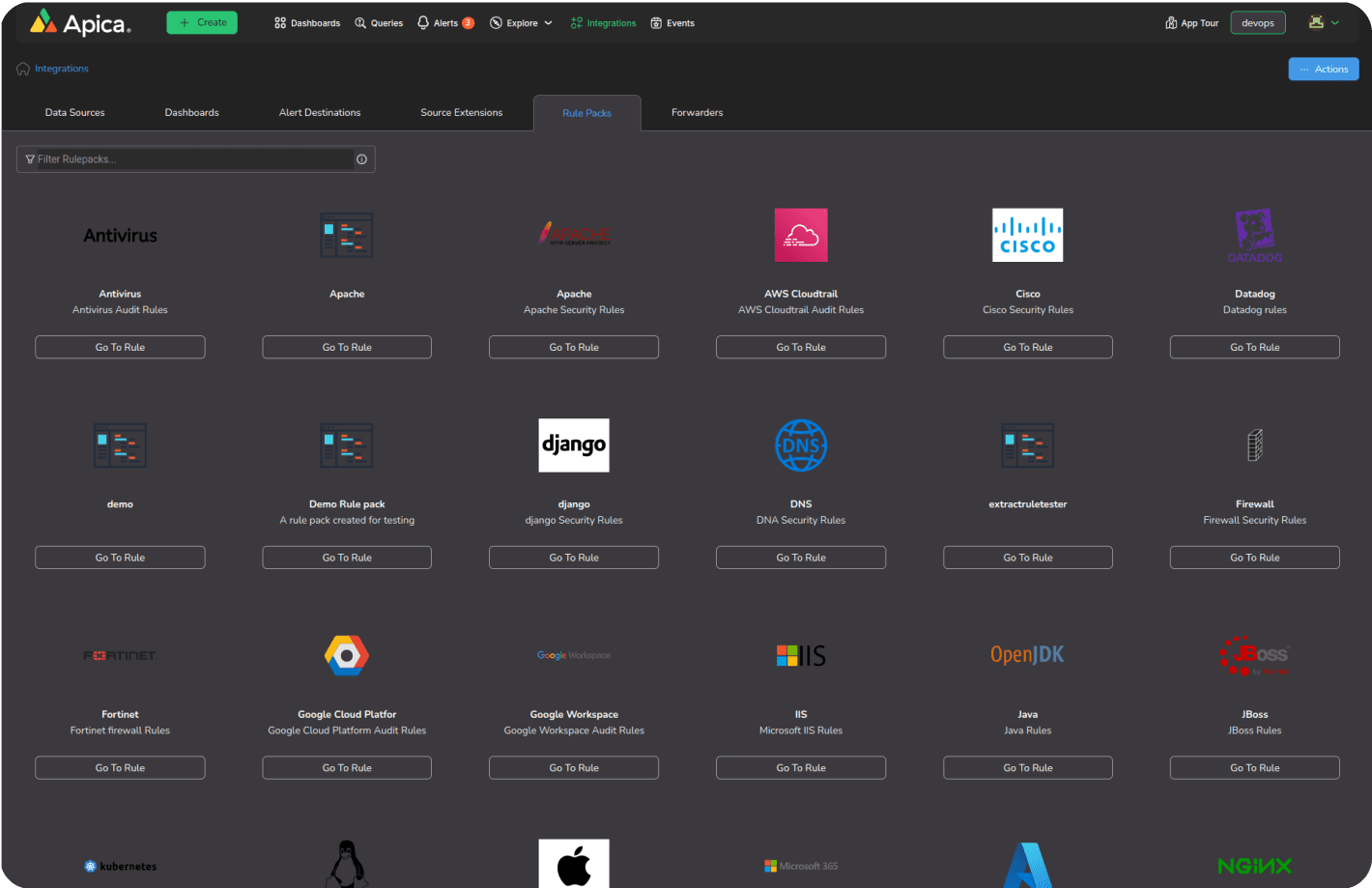

Smart rule packs for data optimization

Use pre-built rule packs to optimize data flow into target systems. Rule packs bundle rules for data filtering, extraction, tagging, and rewriting. They offer fine-grained control: apply the entire pack, or pick and choose specific rules to create custom data optimization scenarios.
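
Conceptually, a rule pack can be thought of as a named, ordered set of small transformations. The sketch below is a hypothetical model of that idea, not Flow's rule-pack format: the `RulePack` class, the rule names, and the `only` parameter are invented here to show how a whole pack or a chosen subset might be applied.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

Event = dict
Rule = Callable[[Event], Optional[Event]]  # a rule returns None to drop the event


def drop_debug(event: Event) -> Optional[Event]:
    """Filter: discard debug-level events."""
    return None if event.get("level") == "debug" else event


def extract_status(event: Event) -> Event:
    """Extract: pull an HTTP status code out of the message, if present."""
    match = re.search(r"status=(\d{3})", event["message"])
    return {**event, "status": int(match.group(1))} if match else event


def tag_env(event: Event) -> Event:
    """Tag: attach a static environment label."""
    return {**event, "env": "prod"}


def mask_email(event: Event) -> Event:
    """Rewrite: mask anything that looks like an email address."""
    masked = re.sub(r"[\w.+-]+@[\w.-]+", "<email>", event["message"])
    return {**event, "message": masked}


@dataclass
class RulePack:
    rules: dict[str, Rule]

    def apply(self, event: Event, only: Optional[set[str]] = None) -> Optional[Event]:
        """Run all rules in order, or just the ones named in `only`."""
        for name, rule in self.rules.items():
            if only is not None and name not in only:
                continue
            event = rule(event)
            if event is None:
                return None
        return event


pack = RulePack({
    "filter": drop_debug,
    "extract": extract_status,
    "tag": tag_env,
    "rewrite": mask_email,
})

event = {"level": "info", "message": "status=502 user=a@b.com"}
print(pack.apply(event))                            # entire pack
print(pack.apply(event, only={"tag", "rewrite"}))   # selected rules only
```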

Trim off excess data

Reduce system costs and improve performance using powerful filters. Flow helps remove unwanted events and attributes from your log data that offer no real value.
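
A small sketch of what trimming means in practice: drop whole events that match a predicate, strip low-value attributes from the rest, and compare the serialized size before and after. The function names and the sample fields are hypothetical and chosen only for this illustration.

```python
import json
from typing import Callable


def trim(events: list[dict],
         drop_if: Callable[[dict], bool],
         drop_attributes: set[str]) -> list[dict]:
    """Remove unwanted events, then remove unwanted attributes from the rest."""
    return [
        {key: value for key, value in event.items() if key not in drop_attributes}
        for event in events
        if not drop_if(event)
    ]


def serialized_size(events: list[dict]) -> int:
    """Approximate payload size as the total length of the JSON encoding."""
    return sum(len(json.dumps(event)) for event in events)


events = [
    {"level": "info", "path": "/healthz", "user_agent": "probe/1.0", "message": "ok"},
    {"level": "error", "path": "/checkout", "user_agent": "Mozilla/5.0", "message": "card declined"},
]

trimmed = trim(
    events,
    drop_if=lambda e: e["path"] == "/healthz",  # health checks carry no value here
    drop_attributes={"user_agent"},
)
print(serialized_size(events), "->", serialized_size(trimmed))
```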

Augment data attributes

Normalize your log data with additional attributes. Flow also ships with built-in Sigma SIEM rules, so your logs are automatically enriched with any security events they trigger.
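
To illustrate the enrichment step, the sketch below matches events against a deliberately simplified, Sigma-style rule and attaches any hits as a new attribute. It is not an implementation of the Sigma specification and not Flow's rule engine: the rule content, the `security_events` attribute, and the matcher (exact match plus a `contains` modifier) are assumptions of this example.

```python
# A hypothetical rule, loosely modeled on Sigma's title/level/selection layout.
RULES = [
    {
        "title": "Sudo to interactive root shell",
        "level": "high",
        "selection": {"app": "sudo", "message|contains": "COMMAND=/bin/bash"},
    },
]


def matches(event: dict, selection: dict) -> bool:
    """All selection entries must match (exact match, or substring for |contains)."""
    for key, expected in selection.items():
        field, _, modifier = key.partition("|")
        actual = str(event.get(field, ""))
        if modifier == "contains":
            if expected not in actual:
                return False
        elif actual != expected:
            return False
    return True


def augment(event: dict, rules: list[dict]) -> dict:
    """Return a copy of the event with the detections it triggered attached."""
    hits = [
        {"title": rule["title"], "level": rule["level"]}
        for rule in rules
        if matches(event, rule["selection"])
    ]
    return {**event, "security_events": hits}


event = {"app": "sudo", "message": "alice : TTY=pts/0 ; USER=root ; COMMAND=/bin/bash"}
print(augment(event, RULES))
```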

Visualize data pipeline in real-time

Parse incoming log data to extract time-series metrics for anomaly detection and to facilitate downstream dashboard creation, monitoring, and log visualization.
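
As a simplified picture of turning logs into time-series metrics, the sketch below counts error events per minute and flags minutes whose count sits well above the mean. The z-score check is a stand-in chosen for brevity, not the anomaly detection method the product uses, and the event fields (`ts` in epoch seconds, `level`) are assumed.

```python
from collections import Counter
from statistics import mean, pstdev


def errors_per_minute(events: list[dict]) -> dict[int, int]:
    """Bucket error events by the start of their minute (epoch seconds)."""
    counts: Counter = Counter()
    for event in events:
        if event["level"] == "error":
            counts[event["ts"] // 60 * 60] += 1
    return dict(sorted(counts.items()))


def anomalies(series: dict[int, int], threshold: float = 2.0) -> list[int]:
    """Return the buckets whose value exceeds the mean by `threshold` std devs."""
    values = list(series.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return [bucket for bucket, value in series.items()
            if (value - mu) / sigma > threshold]


events = [
    {"ts": 5, "level": "error"},
    {"ts": 30, "level": "error"},
    {"ts": 65, "level": "error"},
    {"ts": 70, "level": "info"},
]
print(errors_per_minute(events))

# A longer (made-up) series with one obvious spike in the last minute.
series = {0: 1, 60: 1, 120: 1, 180: 1, 240: 1, 300: 10}
print(anomalies(series))
```

The resulting per-minute series is the kind of data a dashboard or alerting rule would consume downstream.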

INTEGRATIONS

*Trademarks belong to their respective owners.