Container orchestration is essential in any modern containerized environment, and by most accounts the majority of contemporary applications now rely on containers and microservice-based architectures.

Google designed Kubernetes to tackle container orchestration across the board and was an early mover in offering container hosting on Google Cloud Platform. However, if you keep up with the cloud world, you’ll know that since Google introduced Kubernetes in 2014, its remarkable advantages have often been overshadowed by the difficulty of managing the underlying hardware and software, especially the control plane components.

About a year after Kubernetes was released, GKE came along. Google was aware of the drawbacks and challenges that a self-managed Kubernetes cluster presented. As a result, it created Google Kubernetes Engine, a Kubernetes environment on GCP where the control plane is primarily maintained by Google.

Even though GKE significantly simplified setting up, configuring, and running your own Kubernetes cluster, a substantial amount of background knowledge was still needed to build a production-ready environment. In recognition of this, Google released Cloud Run in 2019.

With Cloud Run, Google further abstracted away the complex infrastructure components that no developer wants to deal with. Google promised that Cloud Run would manage the data plane as well, bringing this Kubernetes-based product to the point where it could be called “fully managed.”

However, Cloud Run’s limited customizability, and the uncertainty about security that comes with so much abstraction, led Google to release yet another product, GKE Autopilot, towards the end of February 2021. GKE Autopilot claims to provide the best of both worlds: the ease of maintenance of Cloud Run together with the customizability and freedom of standard GKE.

How does Autopilot work?

Autopilot is a mode of operation for GKE that ships with automated, secure defaults. Google is solely responsible for managing the nodes and node pools, and both security upgrades and maintenance are applied automatically. If you configure a private cluster, networking is locked down by default as well.

In principle, it is still Kubernetes, but all you have to care about is setting up and operating your workloads and applications, not the platform they run on. In terms of provisioning, you only need to define your resource requests, limits, and autoscaling rules; Autopilot takes care of the rest. You are not charged for the nodes of the underlying cluster. Instead, you are charged only for the resources (CPU, memory, storage) that your running pods request.
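
To make the "requests, limits, and autoscaling rules" part more concrete, here is a minimal sketch in Python that builds a Deployment and a HorizontalPodAutoscaler manifest as plain dictionaries. The helper names (`make_deployment`, `make_hpa`), the application name, the image, and all values are hypothetical and chosen only for illustration; setting limits equal to requests is an assumption of this sketch, not a requirement stated in this article.

```python
import json


def make_deployment(name, image, replicas, cpu, memory):
    """Build a Deployment manifest (hypothetical helper) as a plain dict.

    The resource requests are the part that matters most here: they tell
    the cluster how much CPU and memory each pod needs.
    """
    resources = {
        "requests": {"cpu": cpu, "memory": memory},
        # Assumption for this sketch: limits mirror the requests.
        "limits": {"cpu": cpu, "memory": memory},
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "resources": resources}
                    ]
                },
            },
        },
    }


def make_hpa(name, min_replicas, max_replicas, cpu_target_percent):
    """Build an autoscaling/v2 HorizontalPodAutoscaler for the Deployment."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": name,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_target_percent,
                        },
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical workload: two replicas of a small web server.
    print(json.dumps(make_deployment("web", "nginx:1.27", 2, "500m", "512Mi"), indent=2))
    print(json.dumps(make_hpa("web", 2, 10, 70), indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, each printed manifest could be saved to its own file and applied to a cluster; nothing in the sketch is specific to Autopilot beyond the fact that the pod spec is the only thing you describe.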

The advantages of GKE Autopilot

The following are some of the benefits of GKE Autopilot over standard GKE:

- Managed control plane and data plane

- Automatic node repair, health monitoring, and health checks

- There is no need to determine how much computing power your workloads will demand.

- You pay only for the resources each pod requests, no more and no less, so you are no longer paying for idle nodes. This is really appealing to someone like me, who has spent a lot of time working out the proper resource requests and limits for each application, but it also makes costs somewhat less predictable.

- The default security configuration matches what you would expect of a production system, particularly when compared to the standard GKE defaults. Here are some examples of Autopilot’s default settings:

  - Shielded GKE Nodes are enabled, which provides a cryptographic check to validate the identity of each node.

  - NodeLocal DNSCache is enabled, so cluster-internal DNS lookups resolve more quickly.

  - Workload Identity is enabled, which provides enhanced access control for pods and nodes.

- Node autoscaling, node auto-provisioning, and pod autoscaling are managed for you. As a result, workloads can scale automatically at the pod, node, and even node pool level.
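
The per-pod billing point in the list above can be illustrated with a small back-of-the-envelope calculation. This is only a sketch: the function names are hypothetical, and every price in it is a made-up placeholder, not a real Google Cloud rate. Check the current GKE pricing page before drawing any conclusions.

```python
HOURS_PER_MONTH = 730  # common approximation for one month


def pod_based_cost(pods, cpu_per_pod, mem_gib_per_pod,
                   cpu_price, mem_price, hours=HOURS_PER_MONTH):
    """Monthly cost when billing follows what the pods request."""
    hourly_per_pod = cpu_per_pod * cpu_price + mem_gib_per_pod * mem_price
    return pods * hours * hourly_per_pod


def node_based_cost(nodes, node_price, hours=HOURS_PER_MONTH):
    """Monthly cost when billing follows provisioned nodes, idle or not."""
    return nodes * node_price * hours


if __name__ == "__main__":
    # HYPOTHETICAL prices, for illustration only.
    cpu_price = 0.05    # per vCPU-hour
    mem_price = 0.005   # per GiB-hour
    node_price = 0.10   # per node-hour

    by_pod = pod_based_cost(6, 0.5, 2, cpu_price, mem_price)
    by_node = node_based_cost(3, node_price)
    print(f"pod-based:  {by_pod:.2f} per month")
    print(f"node-based: {by_node:.2f} per month")
```

The pod-based figure moves with every change in replica count or requests, which is the flip side mentioned above: nothing is wasted on idle capacity, but the monthly bill is harder to predict than a fixed number of nodes.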

Google describes Autopilot as a “mode of operation” for Google Kubernetes Engine (GKE) that cuts down on the management time and operational cost of Kubernetes by taking over more of the cluster configuration and management. Autopilot automates most of the provisioning and maintenance of the control plane and nodes, and it turns on security and compliance features that standard GKE leaves to the user.

Nevertheless, there is more to the picture than meets the eye. Google is not providing a fully automated platform that also manages the service mesh, cluster deployments, and related tasks, so that developers could simply upload their code to Kubernetes and let the platform take care of the rest. What Google does claim is that Autopilot offloads many of the time-consuming activities involved in creating and managing Kubernetes clusters.

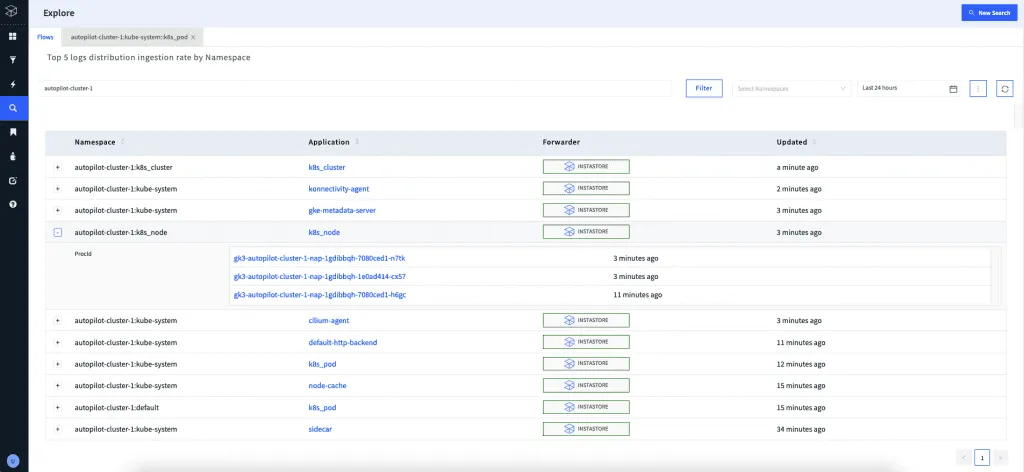

How Apica can enable gathering logs in Autopilot

To gather logs from a GKE Autopilot cluster, you first need to set up log forwarding from GCP Cloud Logging to Apica by following the steps below:

- Create a user-managed service account: Activate Cloud Shell and log in, then create a service account for the VM.

- Create a Cloud Pub/Sub topic: Create the Pub/Sub topic to which Cloud Logging will deliver events for Logstash to receive.

- Create a log sink and subscribe to the topic using Pub/Sub: To export logs to the new Pub/Sub topic, create a log sink. The second part of the command’s output includes a reminder to confirm that the service account used by Cloud Logging has permission to publish events to the Pub/Sub topic.

- Create a virtual machine (VM) to run Logstash and deliver the logs it collects from the Pub/Sub logging sink to apica.io.

Your Apica instance will now receive your GCP Cloud Logging logs. Depending on your requirements, you can adjust the namespace and cluster ID in the Logstash configuration. If you run Logstash on a virtual machine that is not hosted on GCE, you must also provide the service account token in the Logstash configuration.
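
The Google Cloud side of these steps could be scripted roughly as follows. This is a sketch under stated assumptions, not Apica’s official procedure: the function `log_forwarding_commands` and all resource names (service account, topic, sink, subscription) are hypothetical, and the log filter assumes you only want container logs from a single cluster. The script only builds and prints the `gcloud` commands; it does not run them, and it leaves out creating the VM and configuring Logstash.

```python
import shlex


def log_forwarding_commands(project, cluster,
                            sa_name="logstash-forwarder",
                            topic="gke-logs",
                            sink="gke-logs-sink",
                            subscription="gke-logs-sub"):
    """Return gcloud commands (as argument lists) for the log-forwarding setup.

    All resource names are hypothetical defaults; adjust them as needed.
    """
    sink_destination = f"pubsub.googleapis.com/projects/{project}/topics/{topic}"
    # Assumed filter: container logs from one named cluster only.
    log_filter = (
        'resource.type="k8s_container" '
        f'AND resource.labels.cluster_name="{cluster}"'
    )
    return [
        # 1. Service account that the Logstash VM will run as.
        ["gcloud", "iam", "service-accounts", "create", sa_name,
         f"--project={project}"],
        # 2. Pub/Sub topic that Cloud Logging publishes to.
        ["gcloud", "pubsub", "topics", "create", topic,
         f"--project={project}"],
        # 3. Log sink that routes matching entries to the topic.
        ["gcloud", "logging", "sinks", "create", sink, sink_destination,
         f"--log-filter={log_filter}", f"--project={project}"],
        # 4. Subscription that Logstash pulls from.
        ["gcloud", "pubsub", "subscriptions", "create", subscription,
         f"--topic={topic}", f"--project={project}"],
    ]


if __name__ == "__main__":
    for cmd in log_forwarding_commands("my-project", "my-autopilot-cluster"):
        # shlex.join quotes each argument so the line is safe to paste into a shell.
        print(shlex.join(cmd))
```

After the sink is created, remember the reminder mentioned in the steps: the service account that Cloud Logging uses to write to the sink still needs permission to publish to the topic before any logs arrive.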

Should you consider it?

GKE Autopilot is a welcome addition to the Kubernetes ecosystem, and many workloads that would normally be candidates for Cloud Run are an even better fit for GKE Autopilot. Standard GKE remains an excellent option if you have some expertise in managing the data plane of a Kubernetes environment and would like to keep control over resource usage and a specific level of stability in your system.

Cloud Run would likely be the quickest and easiest choice if you wanted to completely concentrate on development, not have to bother about the underlying infrastructure, and had few security restrictions.

GKE Autopilot, on the other hand, would be the best option if you want the advantages of both of the aforementioned services, i.e., a managed control plane and data plane with a good security posture and plenty of customization options.