If you run an observability stack, you are almost certainly familiar with alerting. Alerts notify users when something happens that they need to act on. But alerting brings its own challenge: when there are too many alerts, they become the problem rather than the solution. Is your team stuck with alert fatigue? It is a very real problem, and it is not uncommon for observability solutions to generate false alerts, leaving administrators to spend valuable time handling false notifications.

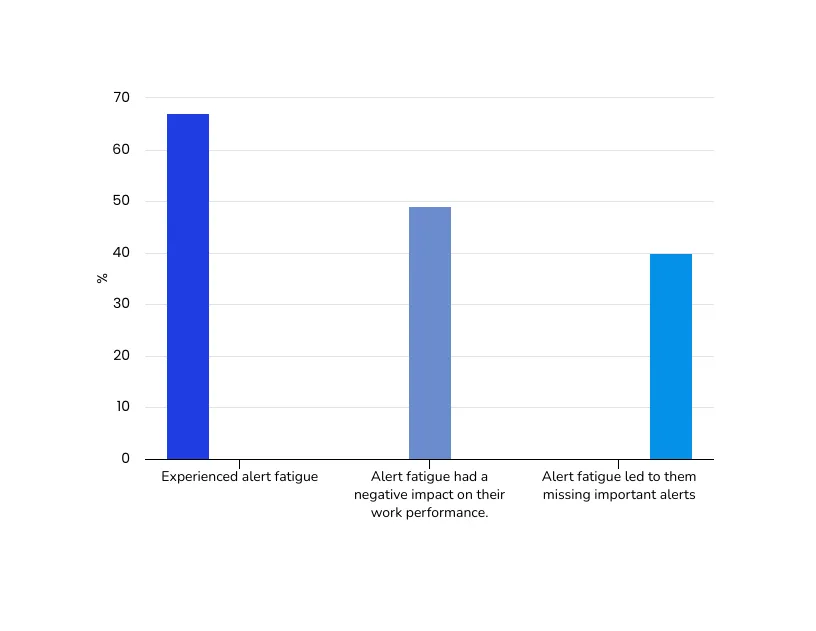

Here are some stats on alert fatigue:

- In a study of 200 respondents by Ponemon Institute, 67% said they experienced alert fatigue.

- Of those surveyed, 49% said alert fatigue had a negative impact on their work performance.

- 40% of those surveyed said alert fatigue led to them missing important alerts.

What causes alert fatigue?

Alert fatigue can stem from several related problems, such as misconfigured alert rules and reliance on simple techniques like static thresholds. If your team is struggling with it, don’t despair! There are ways to combat alert fatigue. More sophisticated techniques, such as machine learning, can reduce the number of false alerts and make your observability stack work better for you.

Here are some common reasons why alerts generate noise:

Lack of context: Without context, it can be difficult to determine whether an alert is valid or not. This is often due to poorly configured alert rules.

Seasonality: Understanding patterns in alerts and alert frequencies can help eliminate unwanted noise. For example, some alerts may routinely trigger on thresholds at specific times of the day. Understanding such seasonality is critical to separating a real issue from an event that should simply be stored for audit purposes.
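
One way to account for time-of-day patterns is to compare each reading with the history for that same hour rather than with a single global limit. The sketch below is a minimal illustration, not a production method: the function names, the sample data, and the choice of three standard deviations (`k=3.0`) are all assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean, pstdev


def build_hourly_baseline(samples):
    """Group (hour, value) samples by hour of day and return {hour: (mean, stdev)}."""
    by_hour = defaultdict(list)
    for hour, value in samples:
        by_hour[hour].append(value)
    return {hour: (mean(vals), pstdev(vals)) for hour, vals in by_hour.items()}


def is_unusual(hour, value, baseline, k=3.0):
    """Return True when value is more than k standard deviations from that hour's mean."""
    if hour not in baseline:
        return True  # no history for this hour, so surface it for review
    mu, sigma = baseline[hour]
    if sigma == 0:
        return value != mu
    return abs(value - mu) > k * sigma


# Hypothetical history: CPU % is routinely high at 02:00 (say, a nightly
# batch job) and low at 14:00.
history = [(2, 88), (2, 92), (2, 90), (2, 91), (14, 30), (14, 34), (14, 32), (14, 31)]
baseline = build_hourly_baseline(history)

print(is_unusual(2, 91, baseline))   # False: high, but normal for 02:00
print(is_unusual(14, 91, baseline))  # True: the same value is unusual at 14:00
```

The same reading of 91% is ignored during the nightly window and flagged in the afternoon, which is the distinction a single fixed threshold cannot make.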

Simple thresholding: Thresholding is a common technique for generating alerts, but a fixed limit often produces false positives and can also miss real problems. A static threshold does not handle the following common situations well:

- Anomalies: Unusual behavior that stays within the allowed range never crosses the threshold, so it goes undetected.

- Gradual changes: If the data drifts slowly over time, the threshold stays silent until the limit is finally crossed, which may be too late.

- Spikes: A brief spike that crosses the limit triggers an alert even if it recovers on its own, while a spike that stays just below the limit is missed.
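
To make these limitations concrete, here is a small sketch of a fixed-limit check run against three made-up series. The limit of 80 and all sample values are hypothetical; the point is only that a static threshold can fire on harmless noise and stay silent during real changes.

```python
def static_threshold_alerts(values, limit):
    """Return the indexes where a value exceeds a fixed limit."""
    return [i for i, v in enumerate(values) if v > limit]


LIMIT = 80  # hypothetical fixed limit, e.g. CPU %

# A one-sample blip that recovers on its own still fires an alert.
brief_spike = [40, 42, 41, 95, 43, 40]

# A steady climb that more than doubles the metric never crosses the limit.
gradual_climb = [35, 40, 45, 50, 55, 60, 65, 70, 75]

# A sudden drop to near zero (for example, traffic vanishing) is invisible
# to an upper limit.
in_range_anomaly = [50, 52, 51, 49, 3, 2, 4]

print(static_threshold_alerts(brief_spike, LIMIT))       # [3]
print(static_threshold_alerts(gradual_climb, LIMIT))     # []
print(static_threshold_alerts(in_range_anomaly, LIMIT))  # []
```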

Inconsistent alerting: If alerting rules are inconsistently applied, it can be difficult to determine which alerts are valid and which are not.

Combating alert noise with autonomous approaches

There are many ways to combat alert fatigue, but using machine learning is one of the most effective. By using more sophisticated techniques, you can help reduce the number of false alerts and make your observability stack work better for you. Here are some machine learning techniques that can help:

Anomaly detection: By using anomaly detection, you can identify unusual behavior that may indicate a problem.
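
As a rough illustration of the idea, the sketch below uses a rolling z-score, one of the simplest statistical forms of anomaly detection: each new sample is compared with the mean and standard deviation of the samples just before it. This is an assumed stand-in for illustration, not a description of any particular product's engine; the window size, the `k` multiplier, and the latency values are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_anomalies(values, window=5, k=3.0):
    """Flag indexes whose value deviates more than k standard deviations
    from the mean of the preceding `window` samples."""
    recent = deque(maxlen=window)
    anomalies = []
    for i, v in enumerate(values):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(v - mu) > k * sigma:
                anomalies.append(i)
        recent.append(v)
    return anomalies


# Hypothetical request-latency samples in milliseconds.
latency_ms = [100, 102, 99, 101, 100, 103, 98, 180, 101, 100]
print(rolling_zscore_anomalies(latency_ms))  # [7]
```

Because the baseline moves with the data, no fixed limit has to be chosen in advance. One trade-off of this simple version is that an outlier stays in the window for a few samples and temporarily widens the tolerance.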

Correlation: Correlation can help you identify relationships between different data points that may be causing an issue.
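
A basic way to quantify such a relationship is the Pearson correlation coefficient between two metric series. The following sketch assumes three hypothetical per-minute series from one service; the metric names and values are invented for the example.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no linear relationship
    return cov / sqrt(var_x * var_y)


# Hypothetical per-minute samples from one service.
db_latency_ms = [20, 22, 21, 60, 85, 90, 24]
api_errors = [1, 0, 1, 9, 14, 15, 1]
disk_free_gb = [500, 501, 499, 500, 501, 499, 500]

print(round(pearson(db_latency_ms, api_errors), 2))    # close to 1: moves together
print(round(pearson(db_latency_ms, disk_free_gb), 2))  # close to 0: unrelated
```

A coefficient near 1 suggests the two alerts may share a cause and could be grouped into one notification. Correlation alone does not establish which metric is driving the other.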

Classification: Classification can help you group data points into categories so that you can more easily identify which alerts are valid and which are not.
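
As a toy illustration, the sketch below trains a nearest-centroid classifier on past alerts that have already been labeled during triage. Everything here is an assumption made for the example: the two features (duration in minutes and number of services affected), the labels, and the sample data. A real system would likely use richer features and a more capable model.

```python
def train_centroids(labeled_alerts):
    """Average the feature vectors of each label into one centroid per label."""
    sums, counts = {}, {}
    for features, label in labeled_alerts:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, f in enumerate(features):
            acc[i] += f
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(features, centroids):
    """Return the label whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda label: squared_distance(features, centroids[label]))


# Hypothetical past alerts: (duration in minutes, services affected) and the
# label an on-call engineer gave each one after triage.
past_alerts = [
    ((1, 1), "noise"),
    ((2, 1), "noise"),
    ((1, 2), "noise"),
    ((15, 4), "actionable"),
    ((30, 6), "actionable"),
    ((20, 5), "actionable"),
]
centroids = train_centroids(past_alerts)

print(classify((2, 1), centroids))   # noise
print(classify((25, 5), centroids))  # actionable
```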

Regression: Regression can help you identify trends over time that may be indicative of a problem.
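
For instance, fitting a straight line to recent samples gives a slope that can be projected forward to estimate when a limit will be reached. The sketch below uses ordinary least squares on evenly spaced samples; the function names, the 90% limit, and the disk usage figures are hypothetical, and it assumes the limit is an upper bound.

```python
def linear_trend(values):
    """Least-squares slope and intercept for values sampled at x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def samples_until(values, limit):
    """Estimate how many more samples until the fitted line reaches `limit`.
    Returns None when the trend is flat or falling."""
    slope, intercept = linear_trend(values)
    if slope <= 0:
        return None
    x_at_limit = (limit - intercept) / slope
    return max(0.0, x_at_limit - (len(values) - 1))


# Hypothetical daily disk usage in percent.
disk_used_pct = [50, 52, 54, 56, 58, 60]
slope, _ = linear_trend(disk_used_pct)
print(slope)                             # 2.0 percentage points per day
print(samples_until(disk_used_pct, 90))  # 15.0 days until the fitted line hits 90%
```

An alert on the projected time to the limit can warn about a gradual change well before a fixed threshold would fire.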

Conclusion

In summary, alert fatigue is a real problem that can lead to wasted time and missed opportunities. But by using machine learning, you can help reduce the number of false alerts and make your observability stack work better for you. apica.io’s anomaly detection engine can significantly reduce alert fatigue for you by using sophisticated machine learning techniques. Visit us today to learn more!