A few months ago, a few of us came together with one mission: to give log analytics its much-desired wings. And by the way, it’s not just AWS S3; you can use any S3-compatible store.

Logging is cumbersome, so we built the Apica platform to bring easy logging to the masses. It is a platform so easy that you can be up and running in less than 15 minutes. Send logs from Kubernetes, on-prem servers, or cloud virtual machines with ease.

What If

- you can retain your logs forever

- you don’t have to worry about how many gigabytes of data you produce

- you can get all crucial events out of logs without a single query

- you can access all of it using a simple CLI or a set of APIs

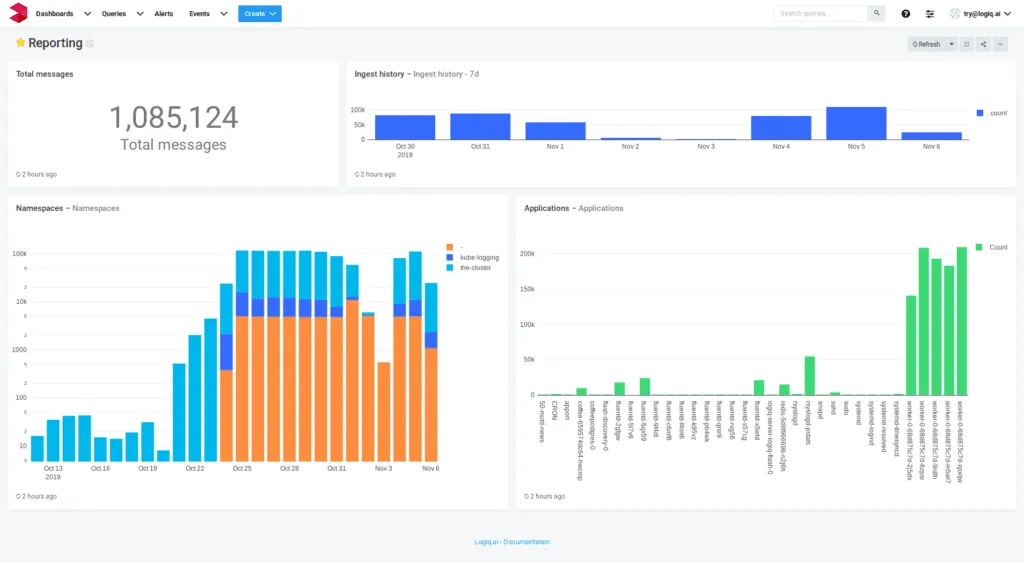

- you can build cool real-time dashboards

And what if all of this comes at a fixed cost?

How Do We Do It?

We use S3 (or S3-compatible storage) as our primary storage for data at rest. The Apica platform exposes protocol endpoints: Syslog, RELP, and MQTT. You can use your favorite log aggregation agent (syslog, rsyslog, Fluentd, Fluent Bit, or Logstash) to send data to those endpoints. (Oh, and we have REST endpoints too.) Our platform indexes all the data as it is ingested. Knowing where the data is makes it easier to serve your queries. Use our CLI, REST, or gRPC endpoints to get data, and it is served directly from S3. Yes, “The Wings”.
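To make the ingestion path concrete, here is a minimal sketch of an application forwarding its log records to a syslog endpoint using only Python's standard library. It is an illustration, not the platform's official client: the host, port, and the function name `build_syslog_logger` are assumptions, and a real deployment would more likely use one of the agents listed above.

```python
import logging
import logging.handlers


def build_syslog_logger(host: str, port: int, app_name: str) -> logging.Logger:
    """Return a logger that forwards records to a syslog endpoint over UDP.

    `host` and `port` are placeholders for whatever syslog endpoint your
    deployment exposes; nothing here is specific to a particular vendor.
    """
    logger = logging.getLogger(app_name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep records out of the root logger's handlers

    # SysLogHandler sends over UDP by default when given a (host, port) tuple.
    handler = logging.handlers.SysLogHandler(address=(host, port))
    # Prefix each message with the application name so it can be filtered later.
    handler.setFormatter(
        logging.Formatter(f"{app_name}: %(levelname)s %(message)s")
    )
    logger.addHandler(handler)
    return logger
```

Calling `build_syslog_logger("127.0.0.1", 514, "apache").info("GET /index.html 200")` would emit one syslog datagram to that address. UDP is fire-and-forget, so for delivery guarantees you would want a TCP or RELP-capable agent instead.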

Dashboards, Covered!

If you think it’s just about the CLI and APIs, think again: we have cool customizable dashboards too, plus a powerful SQL interface to dig deep into your logs.

Real-time, Covered!

Want to know what is happening on your servers in real time? Use our CLI to tail logs from your servers as they arrive (just like you would with tail -f).

> Apicabox tail -h 10.123.123.24 -a apache

What Do We Solve?

Most of the solutions out there hold data in proprietary databases that use disks and volumes to store their data. What’s wrong with that? Well, disks and volumes need to be monitored, managed, replicated, and sized. Put clustering in the mix, and your log analytics project is now managing someone else’s software and storage nightmare.

With S3-compatible storage, you abstract your storage behind an API. 10 GB, 100 GB, 1 TB, 10 TB: it’s no different.