AI and LLM Observability

Optimize, Secure, and Explain AI Systems with Full-Stack Observability

Comprehensive AI and LLM Observability

Ensure peak performance, reliability, and compliance for your Generative AI applications, Large Language Models (LLMs), and AI-driven agents with Ascent, Apica’s intelligent observability platform.

Seamless Integration Across AI Ecosystems

Apica Ascent integrates with providers across the AI stack, including:

- Model providers: OpenAI, Anthropic, Cohere, Mistral, Hugging Face, and more

- Cloud AI platforms: Azure OpenAI, Google AI Studio, Amazon Bedrock, Vertex AI

- On-premises and open-source models like Ollama and GPT4All

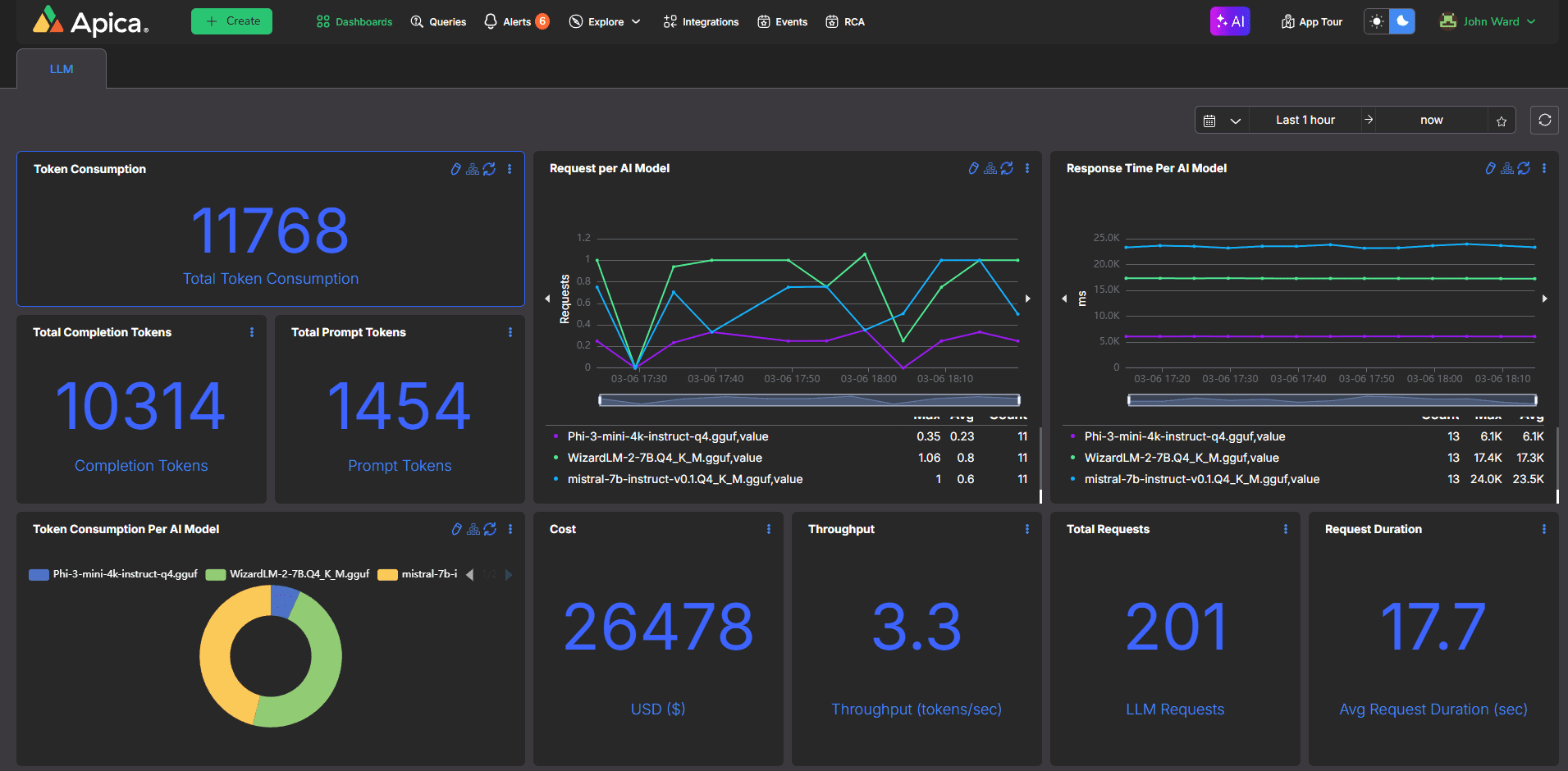

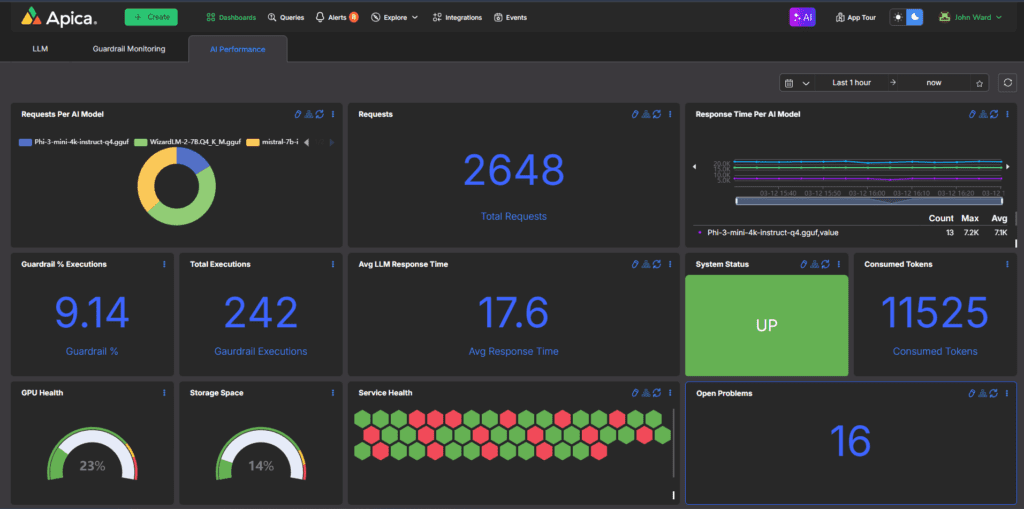

Optimize AI Performance & Reduce Costs

- Monitor token costs, request latency, and system performance in real time with intuitive dashboards

- Leverage AI-driven insights to predict and mitigate cost spikes before they impact budgets

- Pinpoint slow responses, errors, and inefficiencies in LLM interactions with trace analysis

- Automate workflows to maintain optimal AI performance and reliability

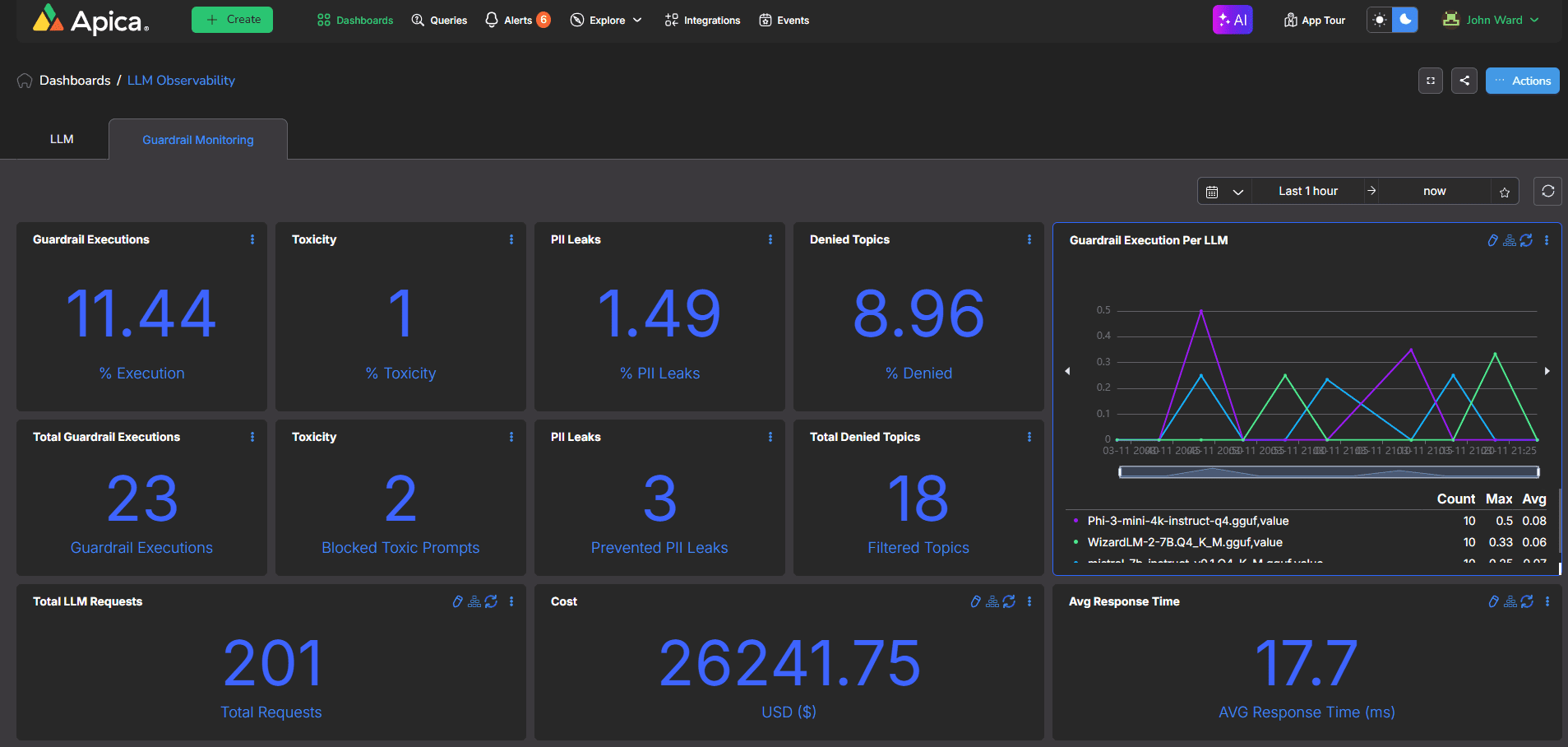
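As a rough illustration of what per-request cost and latency monitoring involves, the sketch below records token counts and wall-clock latency for each model call and aggregates them. It is not Apica's API: `UsageTracker`, `LLMCallRecord`, `fake_llm`, the model name, and the prices in `PRICE_PER_1K` are all hypothetical placeholders.

```python
import time
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K = {"example-model": {"input": 0.0005, "output": 0.0015}}


@dataclass
class LLMCallRecord:
    model: str
    input_tokens: int
    output_tokens: int
    latency_s: float

    @property
    def cost_usd(self) -> float:
        price = PRICE_PER_1K[self.model]
        return (self.input_tokens * price["input"]
                + self.output_tokens * price["output"]) / 1000


@dataclass
class UsageTracker:
    records: list = field(default_factory=list)

    def timed_call(self, model, fn, *args, **kwargs):
        """Run fn, which must return (text, input_tokens, output_tokens), and record the call."""
        start = time.perf_counter()
        text, n_in, n_out = fn(*args, **kwargs)
        latency = time.perf_counter() - start
        self.records.append(LLMCallRecord(model, n_in, n_out, latency))
        return text

    def total_cost(self) -> float:
        return sum(r.cost_usd for r in self.records)

    def mean_latency(self) -> float:
        return mean(r.latency_s for r in self.records) if self.records else 0.0


def fake_llm(prompt: str):
    """Stand-in for a real model client; token counts are approximated by word counts."""
    reply = ("ok " * 20).strip()
    return reply, len(prompt.split()), 20


tracker = UsageTracker()
tracker.timed_call("example-model", fake_llm, "Summarize the incident report in one line")
print(f"total cost: ${tracker.total_cost():.6f}, mean latency: {tracker.mean_latency():.4f}s")
```

In a real deployment the token counts would come from the provider's response metadata rather than a word count, and the records would be shipped to a dashboard instead of kept in memory.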

Enhance AI Trust & Security

- Detect hallucinations, bias, and prompt injection attacks before they cause harm

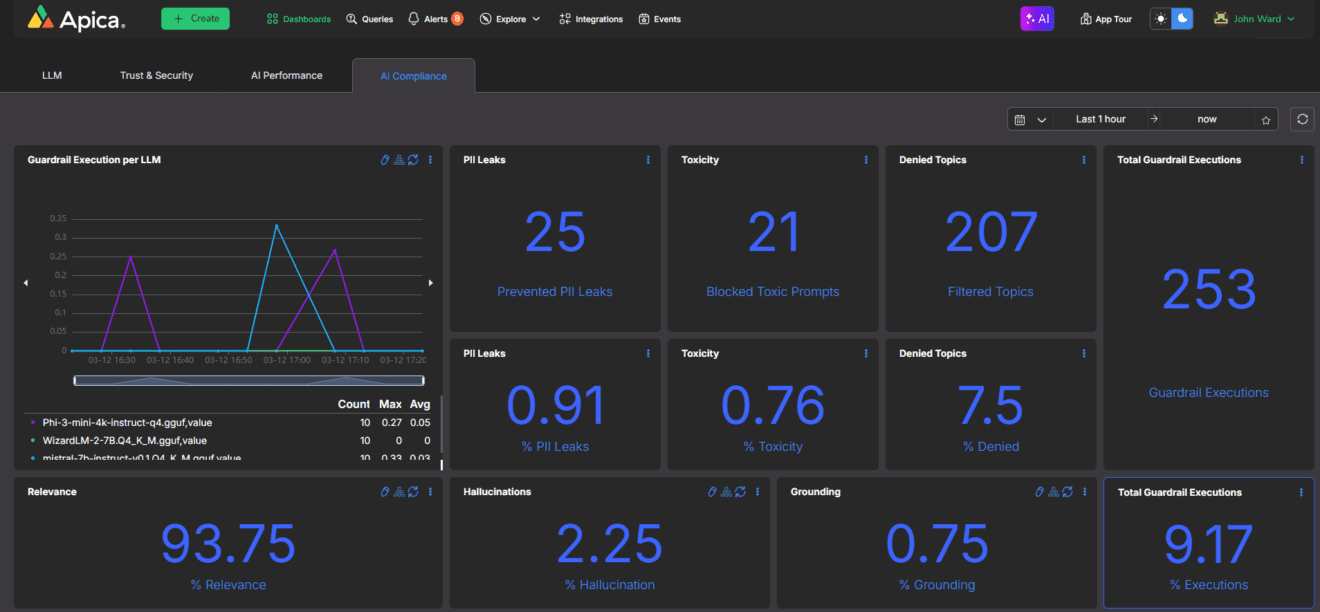

- Prevent PII leakage, toxicity, and compliance violations with automated guardrail monitoring

- Strengthen AI governance with real-time visibility into model behaviors and security risks

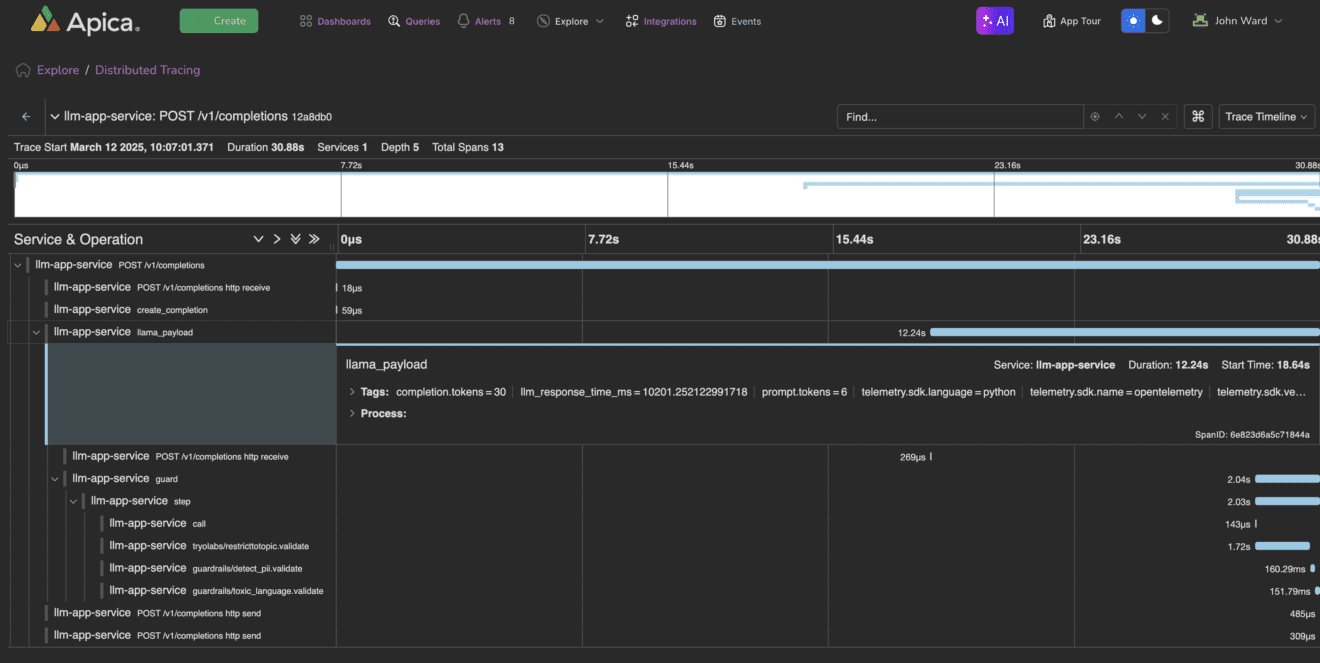
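To make the idea of a PII guardrail concrete, here is a minimal sketch that scans a model response for two kinds of sensitive strings and redacts them. It assumes simple regular expressions are good enough for illustration; the pattern set, `find_pii`, and `redact` are hypothetical and do not represent how Ascent implements guardrails.

```python
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(text):
    """Return {label: [matches]} for every pattern that matches the text."""
    hits = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[label] = matches
    return hits


def redact(text):
    """Replace each match with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label.upper()} REDACTED]", text)
    return text


response = "Contact jane.doe@example.com, SSN 123-45-6789."
print(find_pii(response))
print(redact(response))
```

A check like this would typically run on both prompts and responses, with any hits logged as guardrail events so they can be monitored over time.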

Explainability & End-to-End AI Traceability

- Gain full visibility into AI request execution, spanning orchestration, caching, and model layers

- Track dependencies across LLMs, Retrieval-Augmented Generation (RAG), and AI agents

- Use AI-powered root cause analysis to resolve failures before they impact users
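The kind of end-to-end trace described above can be pictured as nested spans, one per layer. The sketch below is a toy in-memory tracer, assuming a single-threaded request path; `Tracer`, `answer`, and the span names are hypothetical, and a production system would more likely use a standard tracing SDK.

```python
import time
import uuid
from contextlib import contextmanager


class Tracer:
    def __init__(self):
        self.spans = []    # finished spans, in completion order
        self._stack = []   # ids of currently open spans

    @contextmanager
    def span(self, name):
        span_id = uuid.uuid4().hex[:8]
        parent_id = self._stack[-1] if self._stack else None
        self._stack.append(span_id)
        start = time.perf_counter()
        try:
            yield span_id
        finally:
            # Close the span even if the wrapped code returns early or raises.
            self._stack.pop()
            self.spans.append({
                "id": span_id,
                "parent": parent_id,
                "name": name,
                "duration_s": time.perf_counter() - start,
            })


tracer = Tracer()
cache = {}


def answer(prompt):
    with tracer.span("orchestration"):
        with tracer.span("cache_lookup"):
            cached = cache.get(prompt)
        if cached is not None:
            return cached
        with tracer.span("model_call"):
            result = f"echo: {prompt}"  # stand-in for a real model request
        cache[prompt] = result
        return result


answer("hello")
for s in tracer.spans:
    print(s["name"], "parent:", s["parent"], f"{s['duration_s']:.6f}s")
```

Because each span records its parent, a slow or failed request can be walked from the orchestration layer down to the cache or model call that caused it.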

Ensure AI Compliance & Sustainability

- Maintain a full audit trail of inputs and outputs for regulatory adherence

- Visualize AI performance and behaviors to prove compliance

- Monitor infrastructure efficiency to support carbon-reduction initiatives